Ten practices to reduce incident escalations from on-call teams to developers.

Claude Code Workshop & Best Practices

Speaker: Evgeny Potapov, ApexData co-founder & CEO

Hi everyone. For those watching the live stream, I apologize in advance: this is our first time trying this, so there might be problems, but I hope we'll make it to the end. We did the release on Friday night, so what can go wrong? I think it will be okay.

My name is Evgeny Potapov. For the past twenty years, I have worked in tech, doing software development and Site Reliability Engineering (SRE). In 2008, I started a company that provided DevOps services and software development for applications that required high availability and had a huge number of visitors. One of our sites had around 60 million users per day.

In 2008, 2010, 2012, and even 2018, you usually needed a ton of people and a huge amount of time to build things—especially if it was a huge microservice application requiring high availability, hundreds of microservices, and a 50-person development team. But now things are different. Now a team of three developers can build a "lovable" application or any SaaS application, and they get it done in three months.

A lot of people have asked me how we do "AI-first" software development in our company. I promised to share what I know, and since so many people asked, we decided to hold a meetup where I can show our practices, explain what's happening now in LLM coding and how we got here, and discuss best practices for AI coding that don't end with deploying something that gets hacked in one hour or goes down in two.

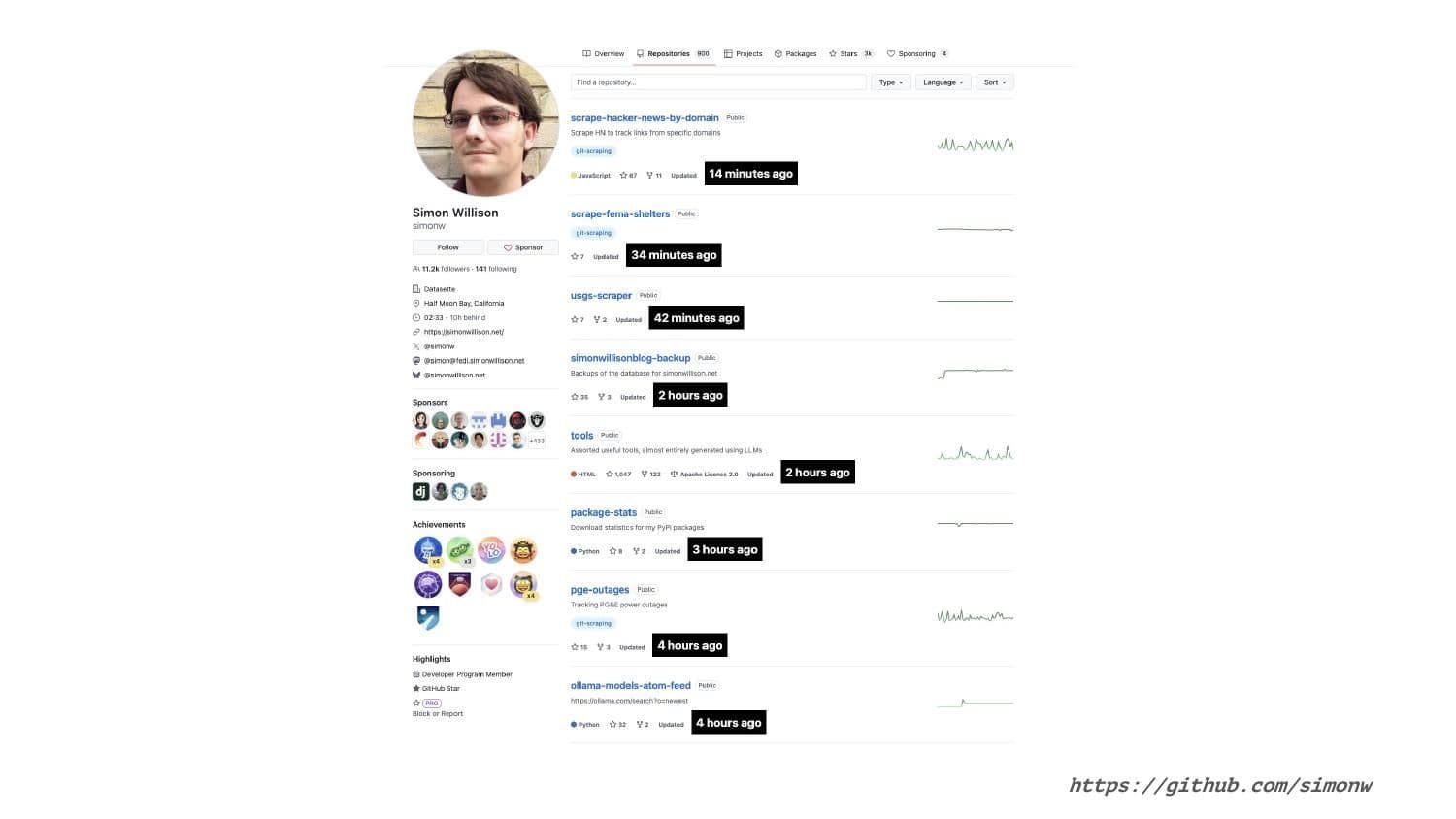

Let's look at where we are now. There are many great software developers who previously did amazing open-source projects. Take Simon Willison, for example, one of the creators of Django.

If you go to his GitHub profile, you'll find eight repositories that were all updated within the last four hours, some of them 14 minutes ago, some 34 minutes ago. What is happening? How is he able to do this, and why can people use the results without them being buggy?

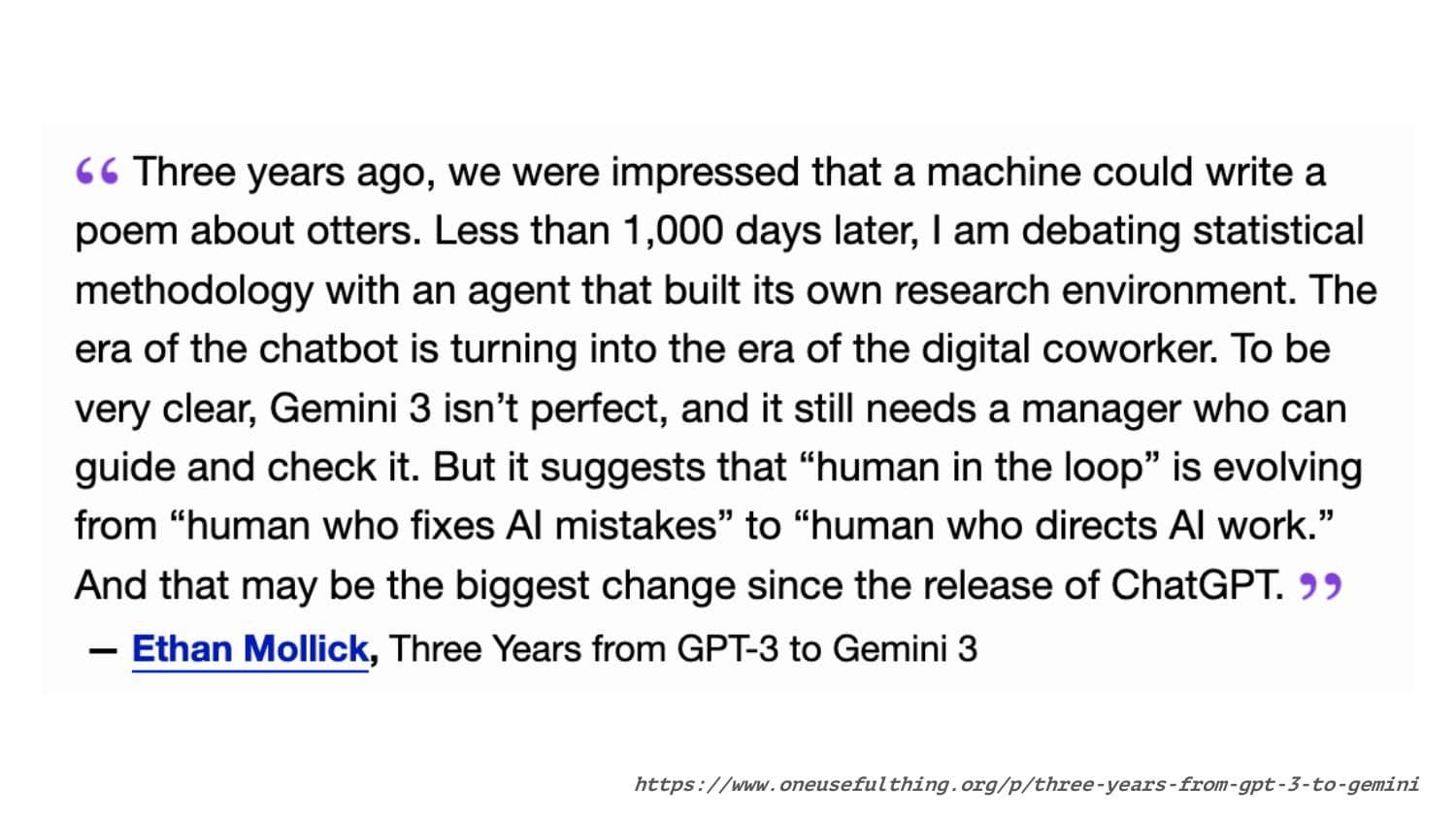

Another post I recently saw explains everything that happened within the last three years. You probably remember when ChatGPT arrived: you went to the chat, typed "write me a poem," and magic happened. Now, scientific researchers are talking about how LLM systems are writing scientific papers—or at least correcting unclear things and helping to improve research.

Three years ago, it was a chat. Now, it's scientific papers. The main surprise for everyone who saw how LLMs hallucinated before is that now it is much more reliable.

How did we get to this place? It’s basically just three years of practical implementation and a couple of years of theoretical work before that. We went through four ages of AI development and LLM models.

The first and most important thing was a single paper published on June 12, 2017, titled "Attention Is All You Need." A group of researchers proposed a new network architecture called the Transformer. If you are amazed by how LLMs work right now, it's incredible to realize that everything we have in LLMs started from this single paper. Until that day, there were no such approaches to large language models.

From there, a couple of things started happening:

2017: "Attention Is All You Need" paper.

2018: OpenAI creates GPT. It wasn't public; they shared it with a few Bay Area startups. It was an API where you could send messages and get text answers.

2019: OpenAI GPT-2. This was a revolution. Many applications appeared providing a chat interface, and it felt like magic. It was reliable enough to generate coherent text. Today, you can run a model the size of GPT-2 on your local Mac, but back then, it was huge.

2020: OpenAI GPT-3.

2021: Something very important happens. People realized GPT-3 could complete code. Companies approached OpenAI to fine-tune a model on codebases. OpenAI released Codex (GPT-3 fine-tuned on software development).

The same month OpenAI Codex was released, GitHub announced Copilot with Codex in the backend. By now, GitHub Copilot is a commodity; everyone uses it.

Guillermo Rauch, the founder of Vercel, noted on the day Copilot was released that it provides code that is uniquely generated and has never been seen before. He called it "magic" and "the future." Obviously, three years later, Copilot is not a revolution anymore—it just does simple autocompletion.

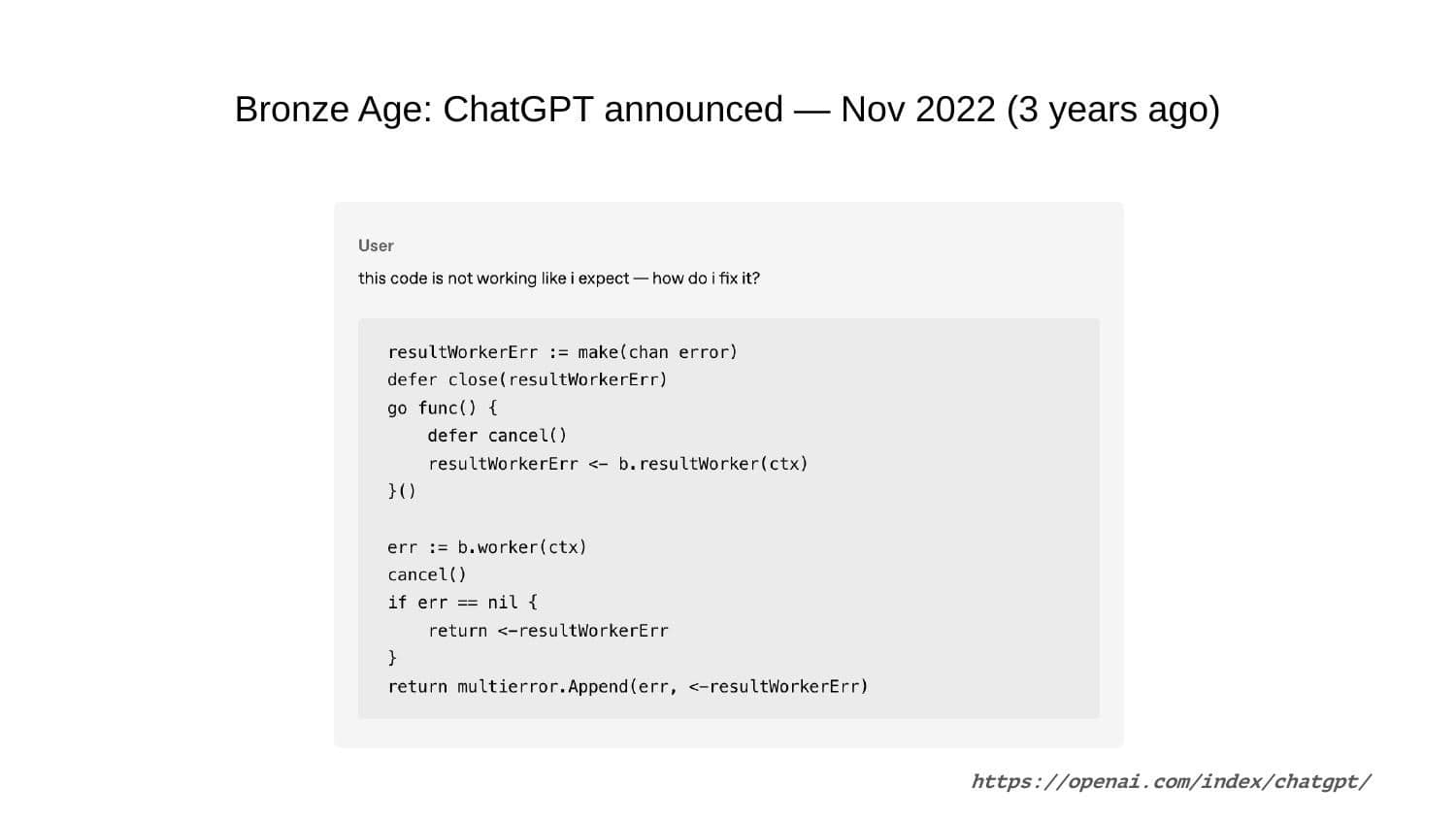

In November 2022, OpenAI released GPT-3.5 and introduced ChatGPT. While most saw a chat bot, we understood that one of the main goals of the GPT-3.5 release was coding assistance.

Let's look at the official ChatGPT announcement. You could put code in the chat and say, "This code is not working like I expect, how do I fix it?"

But back then, the response was often, "It's difficult to say what's happening without more context." It couldn't do much about 10 lines of code. The user had to elaborate.

Once the user clarified, ChatGPT would find the problem (e.g., a channel never being closed in Go) and fix it. In 2022, this was magic. Today, we would say the quality of such answers is poor—you can't build a whole system with it. But three years ago, it was a revolution.

Then came GPT-4 in March 2023. It could draw things, write more code, and handle more context. The model became very focused on coding.

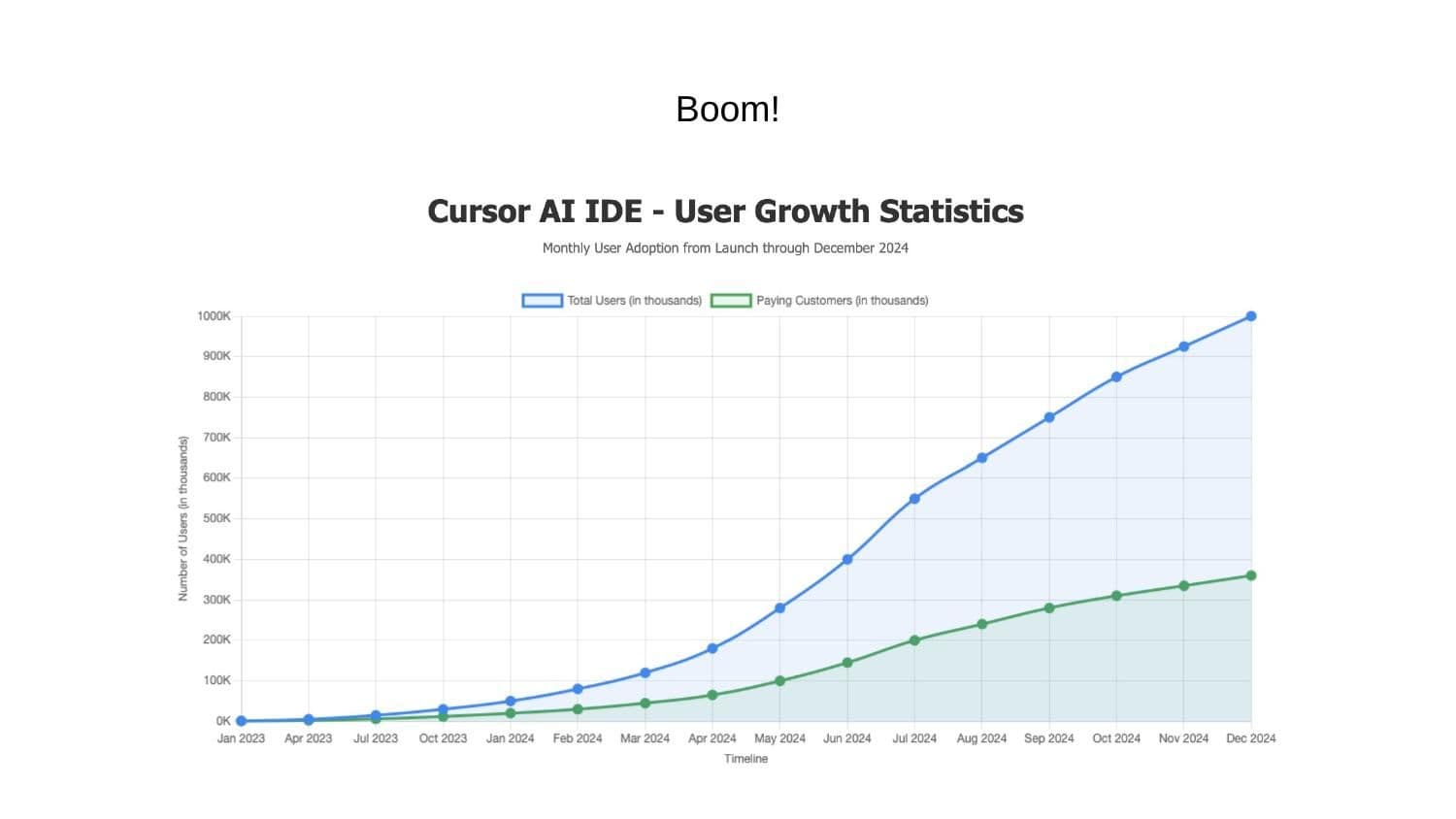

The same month, Cursor was released. Initially, it was just a "weird tool"—basically VS Code with a chat box. But you could highlight a problem in a file and ask it to fix it. This was already a big improvement over copy-pasting into a browser chat. You could refactor and introduce changes directly. However, Cursor didn't get massive adoption immediately because people didn't trust LLMs; they hallucinated too much.

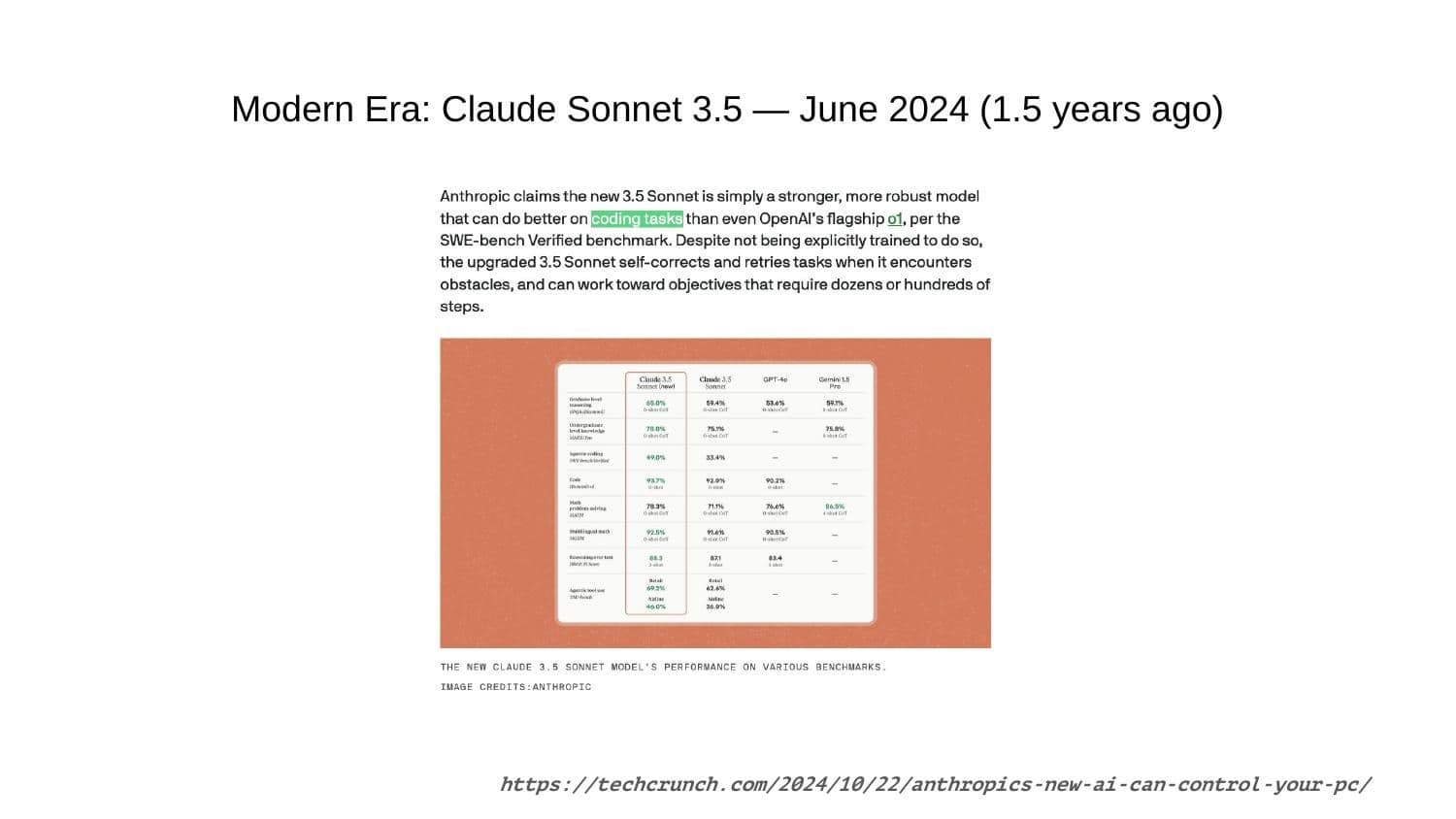

Now we come to something really revolutionary. In June 2024, Anthropic (founded by former OpenAI employees) released Claude 3.5 Sonnet, a model focused strictly on the coding experience that incorporated reasoning functionality.

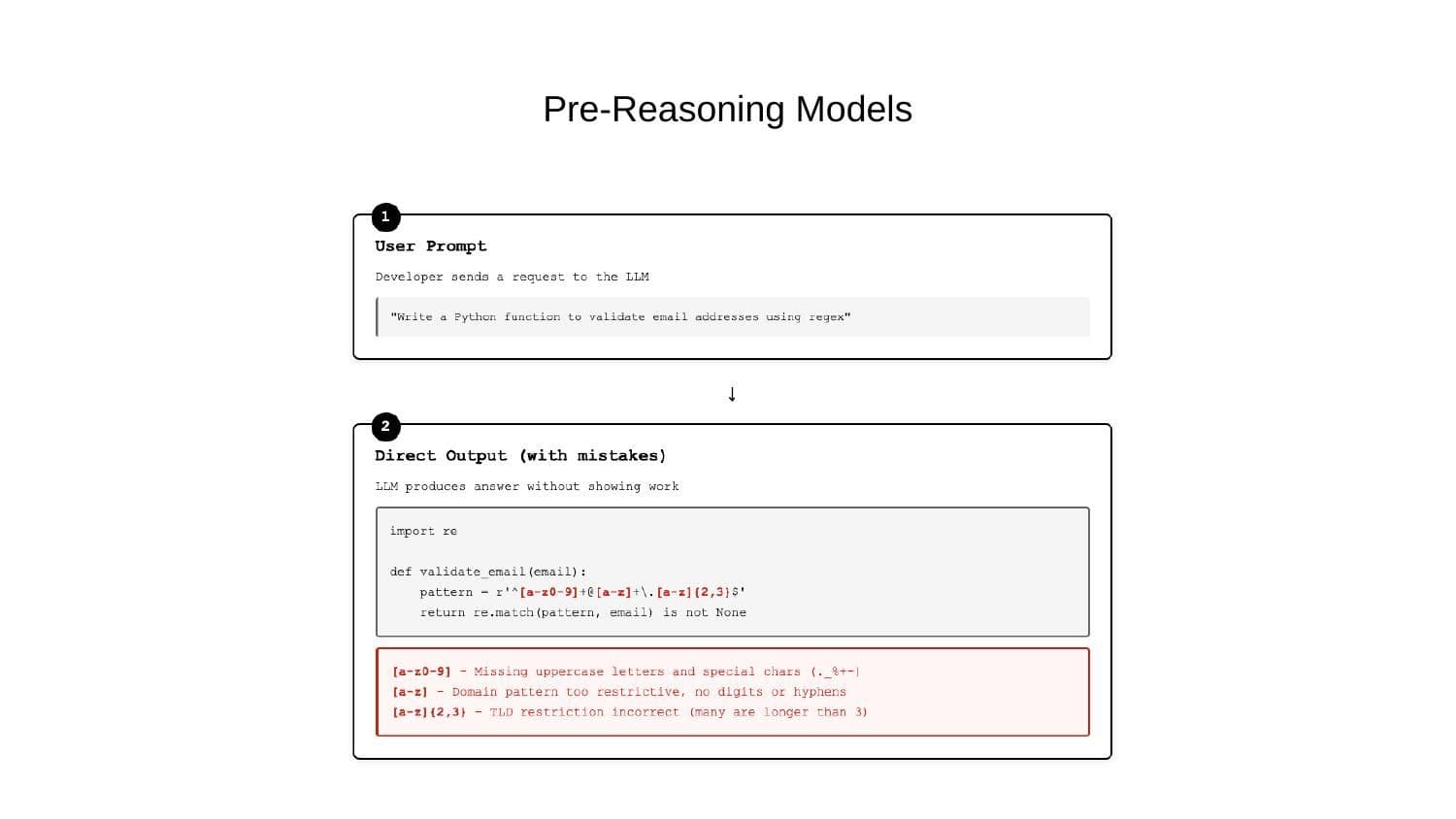

What is a reasoning model? Previously, you typed a prompt, and the LLM immediately tried to predict the next tokens. With code, if you just predict tokens one after another, you get errors.

With reasoning models, instead of providing the result immediately, the model interacts with itself in a loop.

Developer: "Write a Python function to validate an email address using regex."

LLM (Internal): It analyzes the request. Language: Python. Task: Email validation. Method: Regex.

It plans the steps: import re module, define regex, create function. It executes the plan mentally before generating the final code. This cycle allows the LLM to break down tasks and extend the prompt to an ideal state, making the code much more reliable.
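As a minimal sketch, the final code at the end of that cycle might look like the block below. It follows the planned steps (import the re module, define the regex, create the function). The pattern and the name is_valid_email are illustrative assumptions, not the model's actual output, and the pattern is deliberately simpler than what real email standards allow.

```python
import re

# Simplified pattern (an assumption for this sketch): a local part, "@",
# and a domain containing at least one dot. It does not try to cover
# everything the email RFCs permit.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(\.[A-Za-z0-9-]+)+")


def is_valid_email(address: str) -> bool:
    """Return True if the whole string matches the simplified pattern."""
    return EMAIL_PATTERN.fullmatch(address) is not None
```

Calling is_valid_email("user@example.com") accepts the address, while a string without a dotted domain, such as "user@example", is rejected.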

When Claude 3.5 Sonnet arrived, Cursor adopted it, and their user base exploded. You could finally rely on the code changes. Training data also expanded: model providers scrape the internet and buy private codebases to train on. Two years ago, you couldn't reliably write Go code; now you can.

Then came AI Agents. There is a lot of buzz about "agentic AI."

Anthropic defines it simply: "Agents are models using tools in a loop." We have the reasoning loop, and now we provide an interface for tools.

Instead of simply providing text code, the model can now call a tool. This led to the release of Claude Code.

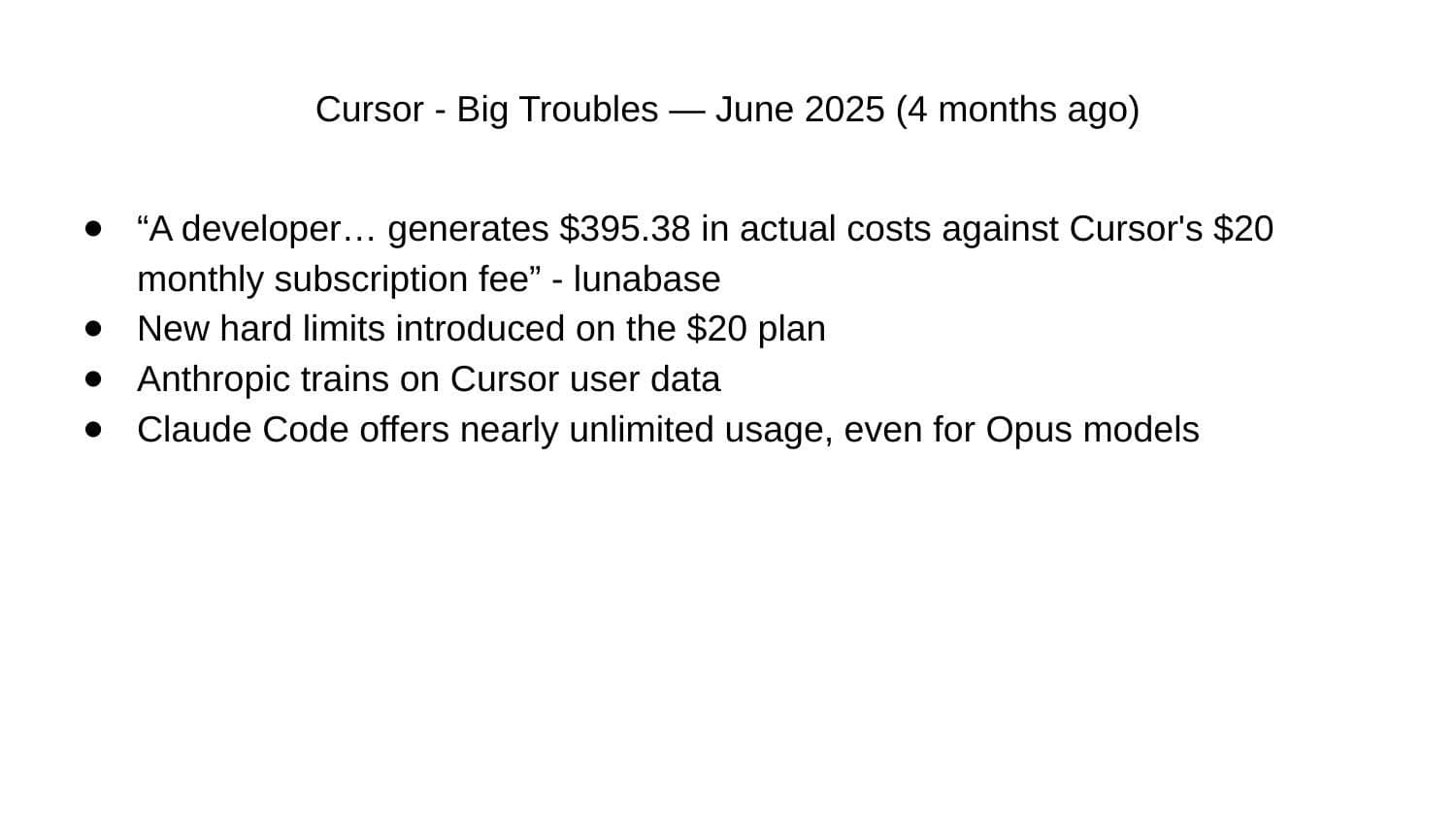

Before this, people wondered if Anthropic would build their own solution to compete with Cursor. Cursor was burning cash—users paid $20/month, but the average cost to execute their requests was nearly $400/month. When Anthropic released Claude Code, they had trained on all the interactions Cursor users had with their API.

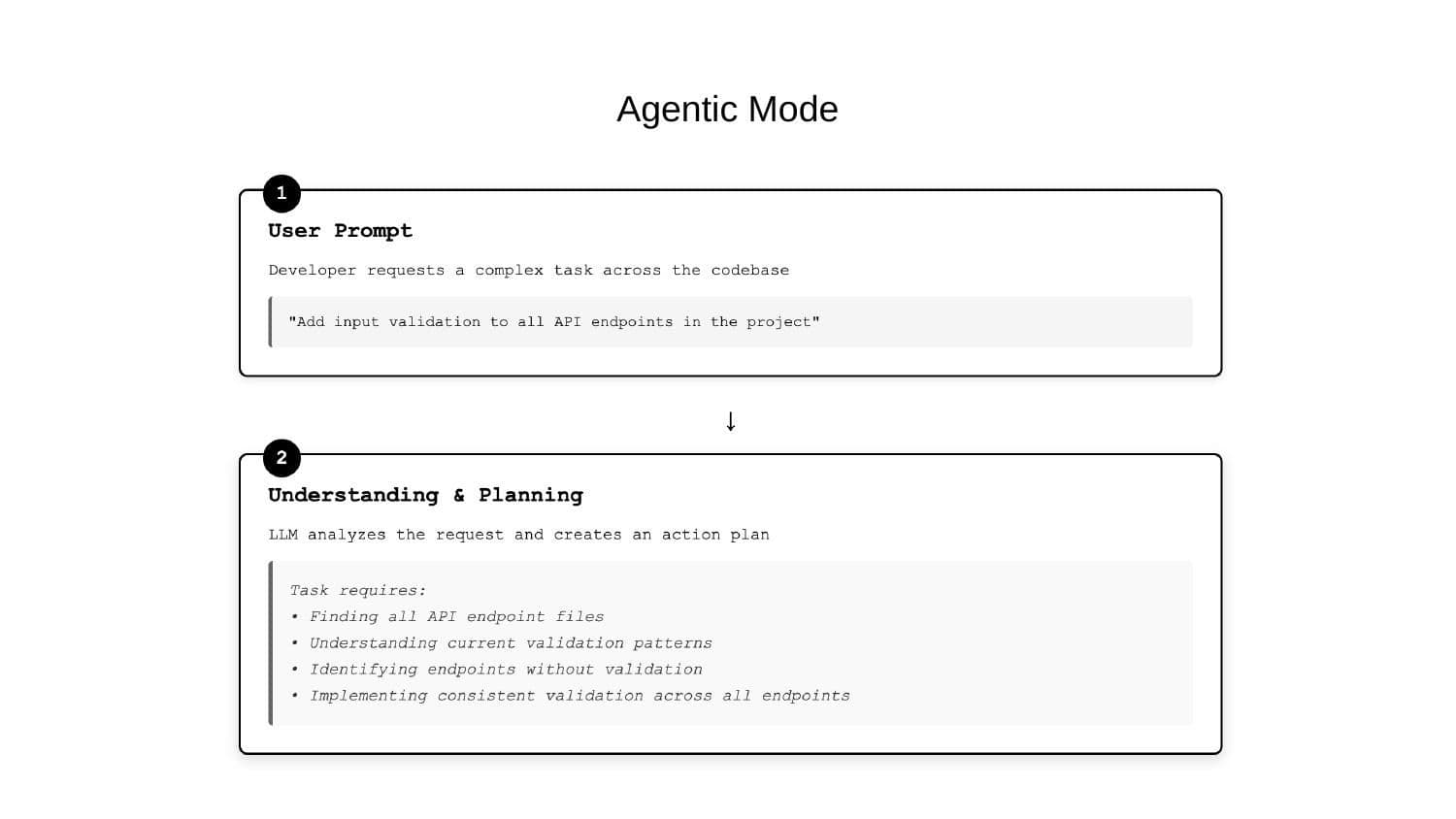

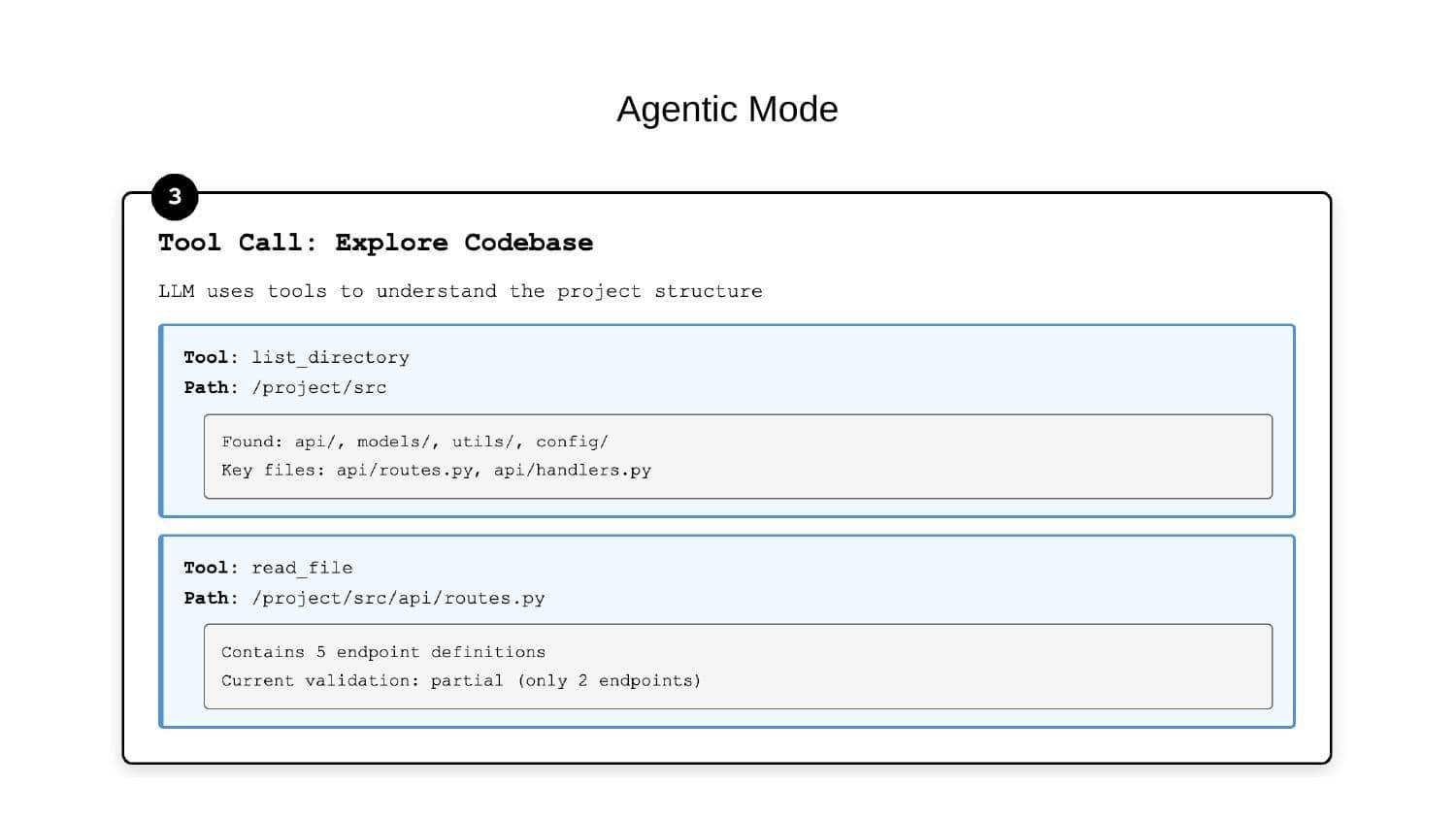

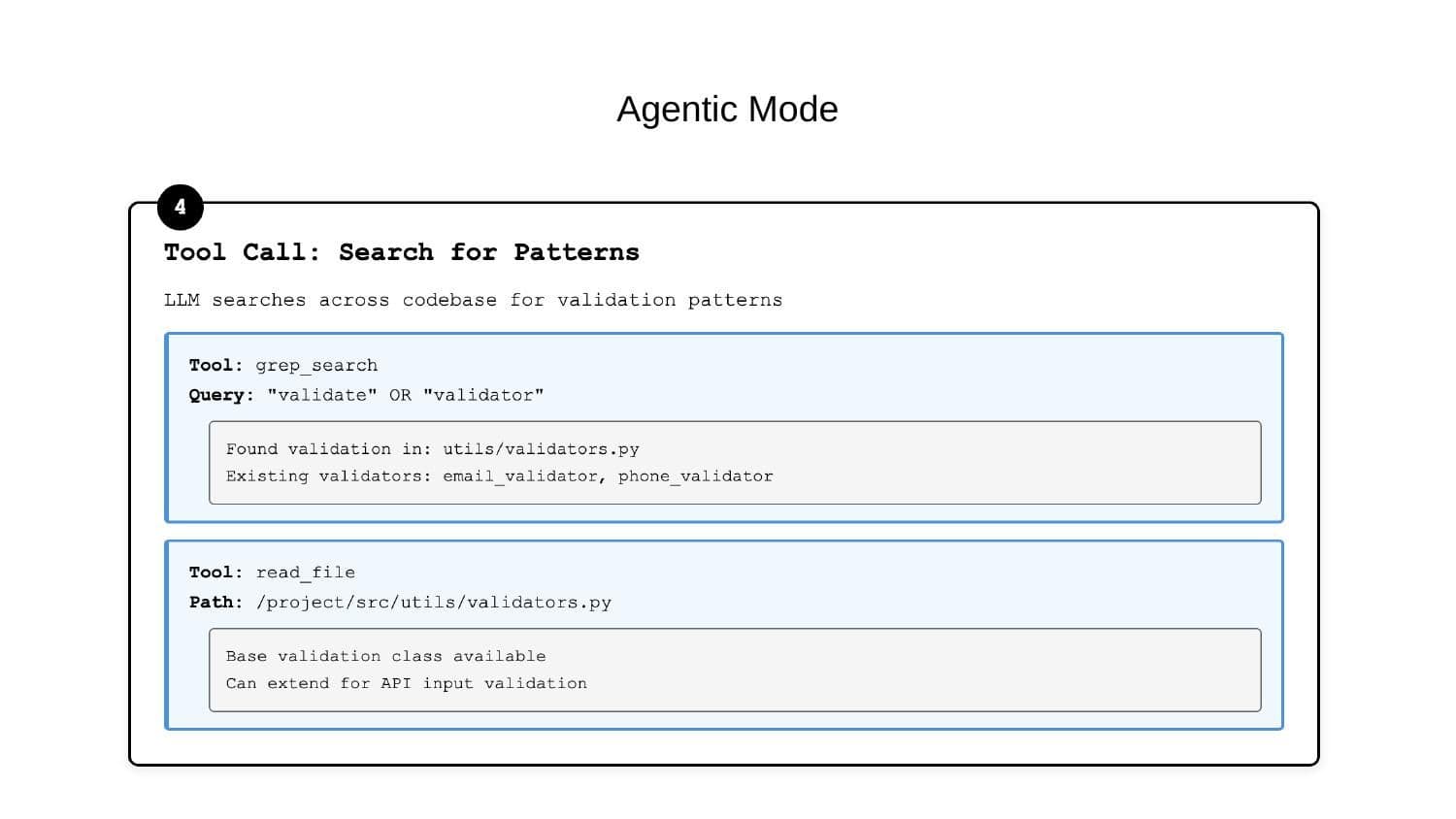

How does it work? Unlike Cursor, which changes single files, agentic tools work on a task level.

User: "Add input validation to all endpoints in the project."

LLM: Analyzes the request, understands it needs to find all API endpoints.

It calls a tool: list_directory. It finds the files. It calls read_file. It puts the source code into the context window (which is now huge).

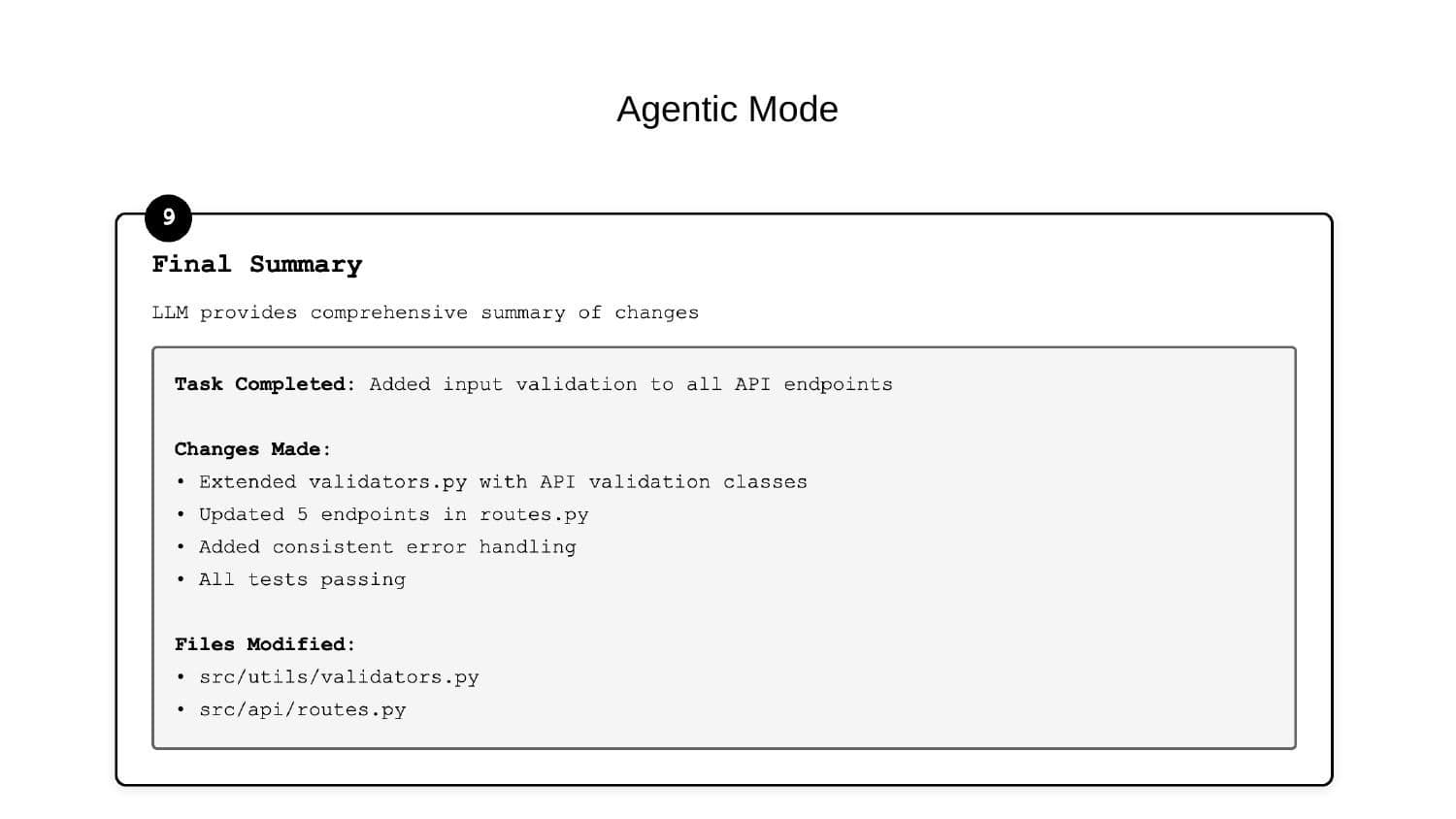

It reasons, decides to extend the validator class, calls edit_file, updates the endpoints, and—crucially—runs tests.

Finally, it validates the code. It asks itself to do a code review. If bugs are found, it fixes them. It can even provide a diff to the IDE instead of rewriting the whole file.
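The walkthrough above can be sketched as a small "tools in a loop" program. This is a toy under stated assumptions, not how Claude Code is implemented: the tool names list_directory, read_file, and edit_file come from the talk, while the in-memory files dictionary, scripted_model (a hard-coded stand-in for the LLM), and run_agent are hypothetical. The test-running and self-review steps are left out to keep it short.

```python
# In-memory stand-in for a project tree (an assumption for this sketch).
files = {
    "api/users.py": "def create_user(payload):\n    return save(payload)\n",
    "README.md": "# Demo project\n",
}


def list_directory(prefix: str) -> list:
    """Return every known path under the given prefix."""
    return sorted(path for path in files if path.startswith(prefix))


def read_file(path: str) -> str:
    """Return the file's source so it can be placed into the context."""
    return files[path]


def edit_file(path: str, old: str, new: str) -> str:
    """Replace one snippet in a file instead of rewriting the whole file."""
    files[path] = files[path].replace(old, new, 1)
    return "ok"


TOOLS = {
    "list_directory": list_directory,
    "read_file": read_file,
    "edit_file": edit_file,
}


def scripted_model(history: list) -> dict:
    """Hypothetical stand-in for the LLM: picks the next action based on
    how many tool results it has already seen."""
    steps = [
        {"tool": "list_directory", "args": {"prefix": "api/"}},
        {"tool": "read_file", "args": {"path": "api/users.py"}},
        {
            "tool": "edit_file",
            "args": {
                "path": "api/users.py",
                "old": "    return save(payload)",
                "new": "    validate(payload)\n    return save(payload)",
            },
        },
        {"done": "added validation to api/users.py"},
    ]
    return steps[len(history)]


def run_agent(model, max_steps: int = 10) -> str:
    """The loop: ask the model for an action, run the tool, feed the result back."""
    history = []
    for _ in range(max_steps):
        action = model(history)
        if "done" in action:
            return action["done"]
        result = TOOLS[action["tool"]](**action["args"])
        history.append({"action": action, "result": result})
    raise RuntimeError("step limit reached without a final answer")
```

Running run_agent(scripted_model) walks through listing, reading, and editing, then returns the model's final summary. The step limit is a design choice for the sketch: it keeps a misbehaving model from looping forever.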

Cursor got in trouble because of the costs. They introduced hard limits. Meanwhile, Anthropic released Claude Code with basically unlimited usage (initially) for the same price point to capture the market.

This changed everything. People started running Claude Code on Mac Minis 24/7 to implement backlogs of tasks.

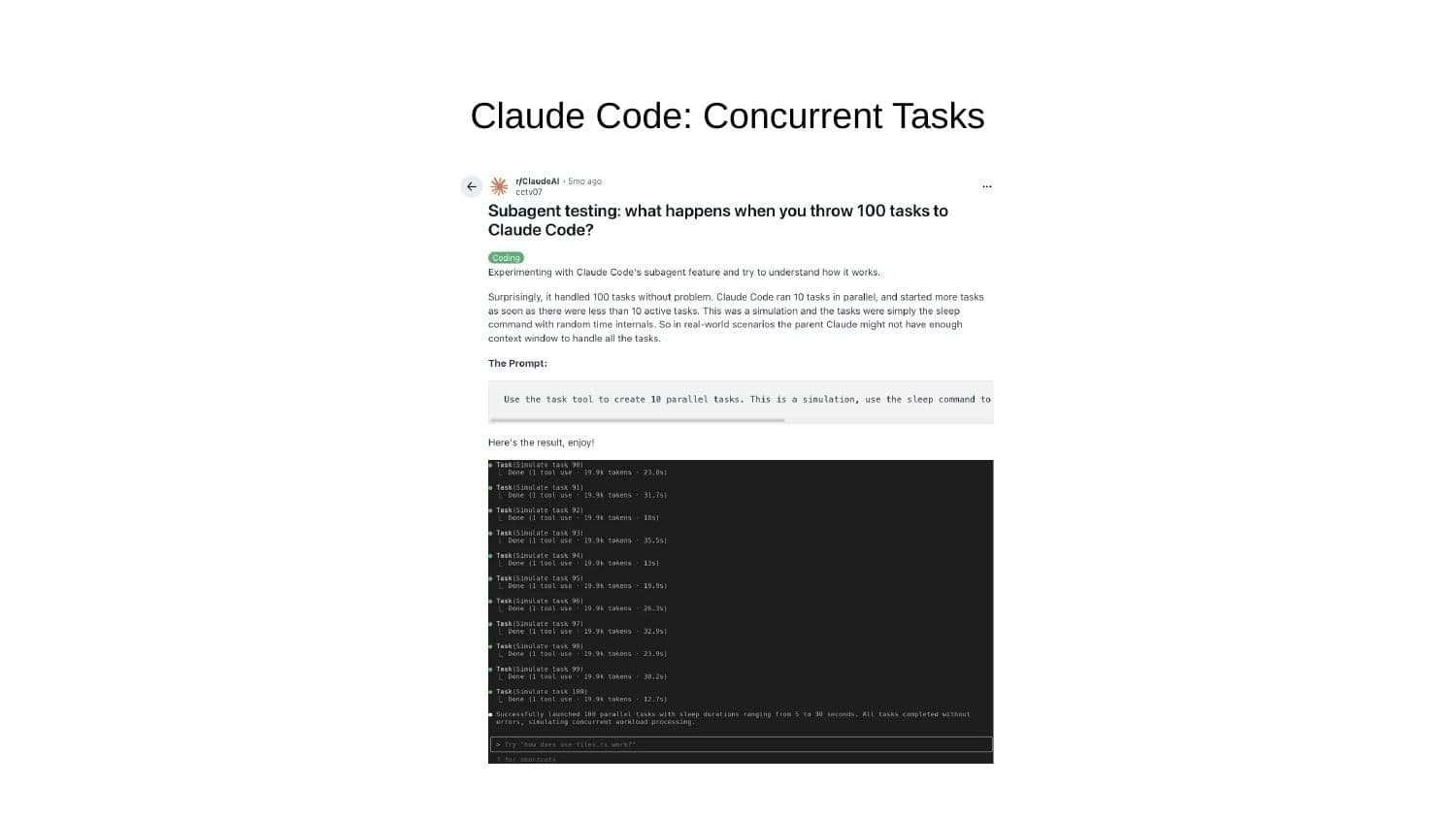

Claude Code introduced Sub-agents. Instead of hitting context limits with one model, it spawns sub-agents. You can generate a prompt, deploy a thread with another model, and run 3, 5, or 10 tasks concurrently.
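A rough sketch of the idea, assuming each sub-agent keeps its own private context and hands back only a short summary. The functions run_subagent and run_concurrently are hypothetical; a real sub-agent would call a model rather than build a string.

```python
from concurrent.futures import ThreadPoolExecutor


def run_subagent(task: str) -> str:
    """Hypothetical sub-agent: works in its own context and returns only a summary."""
    context = [f"task: {task}"]  # private to this sub-agent
    context.append(f"notes gathered while working on {task!r}")
    return f"done: {task} ({len(context)} context entries kept private)"


def run_concurrently(tasks: list, max_workers: int = 3) -> list:
    """Run one sub-agent per task; results come back in the order of the tasks."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_subagent, tasks))
```

The parent only sees the returned summaries, which is what keeps its own context from filling up with every file each sub-agent read.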

Andrej Karpathy called this "Vibe Coding." You work with the whole codebase autonomously. You write a simple prompt, and the AI handles the rest.

However, this "vibe" has a downside. It allows junior developers (or people who don't know how to code) to deploy stuff they don't understand—security issues, reliability problems, unmaintainable code.

There are many tools now: Bolt, Lovable, Replit, etc.

How do we, as developers, improve performance and reliability using these tools?

We still need humans.

Architectural Intuition: You need deep knowledge to know what to skip and how to design systems.

Review: You must review the changes. If you don't, the model will eventually introduce a bug it doesn't understand.

System-level Thinking: LLMs tend to agree with you ("You are absolutely correct!"). You need to provide the critical thinking.

Two critical things you need when working with Claude Code:

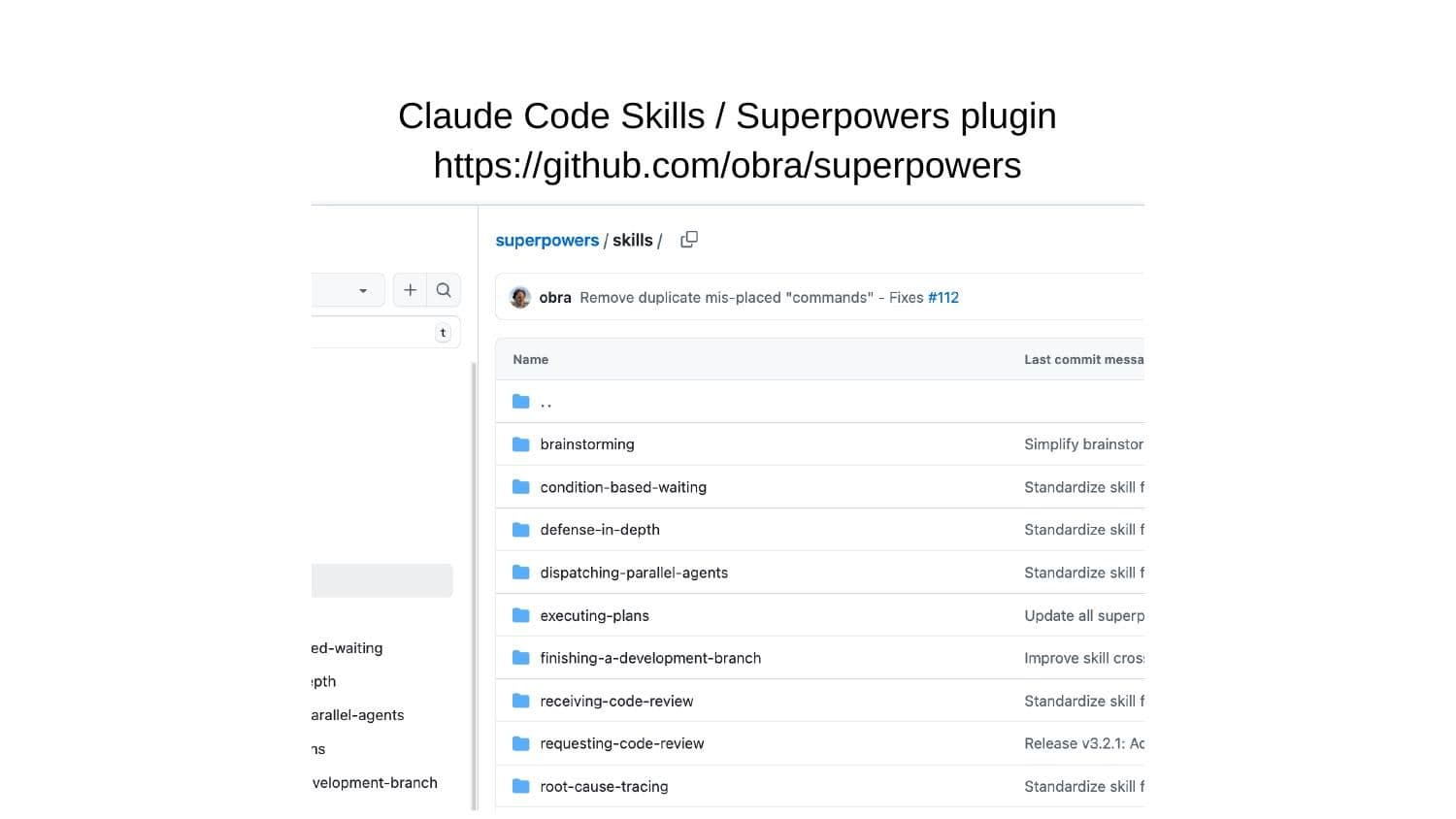

Plugins/Skills: Claude Code released a plugin system. You can inject additional prompts that run as sub-agents. For example, a "Code Review" skill. When requested, it loads a specific, detailed prompt on how to review code and runs it in a separate context.

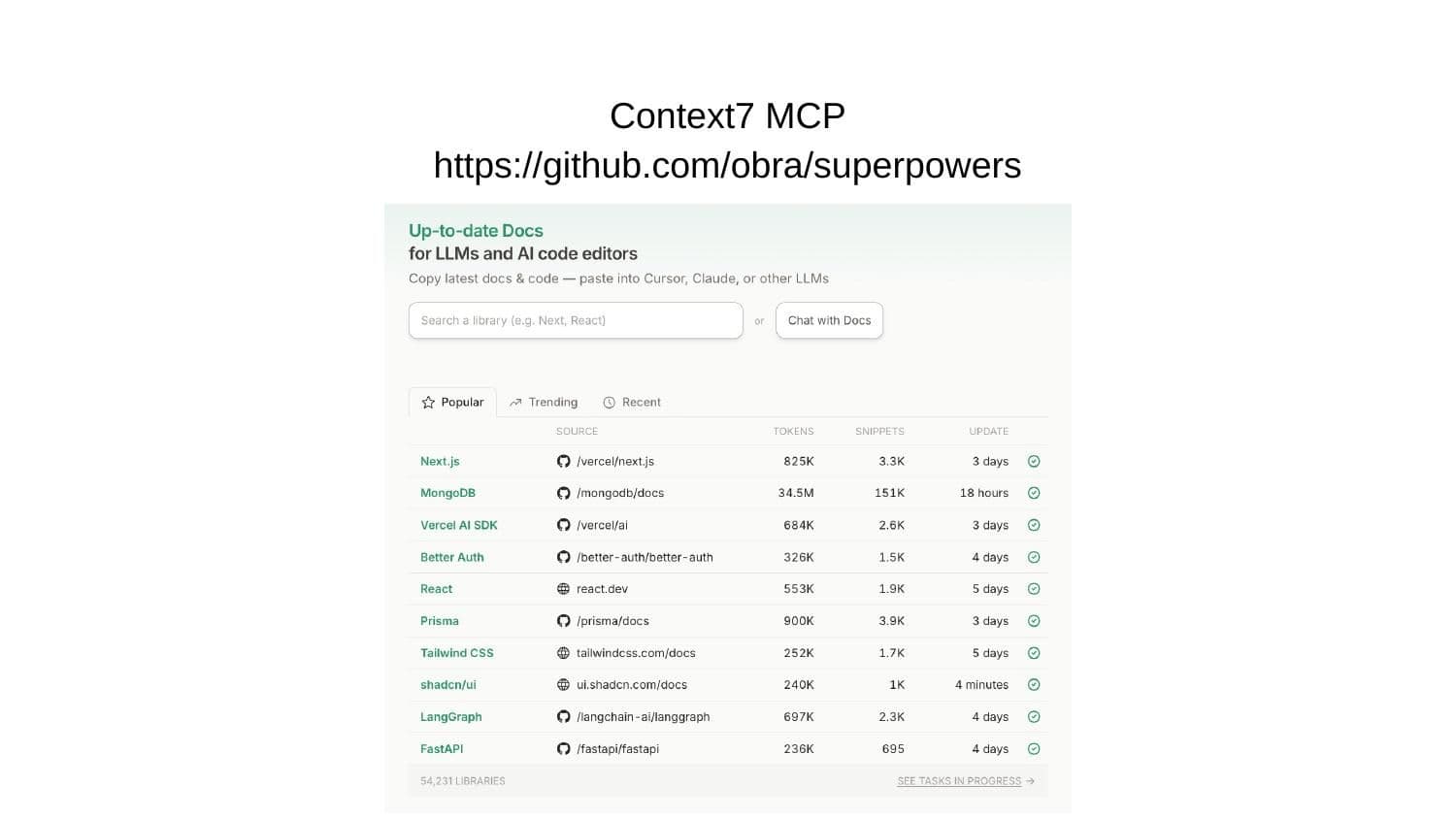

MCP (Model Context Protocol): Specifically, Context7. Models hallucinate on new libraries (e.g., trying to use NextAuth v3 syntax for v4). You can connect an MCP that fetches up-to-date documentation. Context7 parses docs from 10,000 projects into markdown. When the IDE needs info on a library, it queries the MCP instead of hallucinating.

(Note: The speaker proceeds to do a live demo. Below is a summary of the actions and insights).

Demo Workflow:

Initialization: The speaker starts claude in an empty directory.

Checking Skills: He runs /plugin to ensure "superpowers" are installed.

TDD First: He instructs Claude: "I want you to do TDD (Test Driven Development) first approach. Brainstorm what this project is going to be based on. Ask me questions. Use superpowers."

Brainstorming: The model loads the brainstorming skill. Instead of implementing a vague request immediately (which leads to hallucinations), it clarifies.

Claude: "What is the topic?"

User: "Simple e-commerce website."

Claude: "What language?"

User: "React for frontend, Python (using uv) for backend, SQLite for DB."

Planning: Claude creates a detailed plan. It does not write code yet. It creates a plans folder.

Execution: It asks to run the plan using sub-agents. It initializes the git repo (handling the user's laziness by doing commits and pushes). It starts implementing task by task: project structure, database schema, tests.

Database Design: A great feature is asking Claude to design the schema and verify it. If you miss a foreign key, it asks for confirmation.

The "Slot Machine" Effect: The developer often just hits "Enter" (confirm) or "Escape" (stop if it goes wrong). It’s a loop of reviewing plans and hitting Enter.
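To make the "TDD First" and "Database Design" steps concrete, here is a minimal sketch of tests that pin down a foreign key before any application code exists. The table names, columns, and the open_database helper are assumptions for illustration, not the schema produced in the demo. It uses only the Python standard library and an in-memory SQLite database.

```python
import sqlite3
import unittest

# Hypothetical schema for a simple shop: order items must point at a product.
SCHEMA = """
CREATE TABLE products (
    id INTEGER PRIMARY KEY,
    name TEXT NOT NULL,
    price_cents INTEGER NOT NULL CHECK (price_cents >= 0)
);
CREATE TABLE order_items (
    id INTEGER PRIMARY KEY,
    product_id INTEGER NOT NULL REFERENCES products(id),
    quantity INTEGER NOT NULL CHECK (quantity > 0)
);
"""


def open_database() -> sqlite3.Connection:
    """Create an in-memory database with the schema applied."""
    conn = sqlite3.connect(":memory:")
    # SQLite does not enforce foreign keys unless this pragma is enabled.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


class SchemaTest(unittest.TestCase):
    def setUp(self):
        self.conn = open_database()

    def tearDown(self):
        self.conn.close()

    def test_order_item_requires_existing_product(self):
        with self.assertRaises(sqlite3.IntegrityError):
            self.conn.execute(
                "INSERT INTO order_items (product_id, quantity) VALUES (999, 1)"
            )

    def test_order_item_for_existing_product_is_accepted(self):
        self.conn.execute(
            "INSERT INTO products (id, name, price_cents) VALUES (1, 'Mug', 1200)"
        )
        self.conn.execute(
            "INSERT INTO order_items (product_id, quantity) VALUES (1, 2)"
        )
        count = self.conn.execute("SELECT COUNT(*) FROM order_items").fetchone()[0]
        self.assertEqual(count, 1)
```

Saved as a test module, this can be run with python -m unittest. If the REFERENCES clause were missing from the schema, the first test would fail, which is the kind of gap the review step is meant to catch.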

Crucial configuration:

CLAUDE.md: Create a file named CLAUDE.md in the root. Claude reads this first.

Project Context: "You are developing an e-commerce website..."

Constraints: "Always use superpowers. Use Context7 for new libraries. Use Node 20 (do not use 24). Work in main branch for this pet project."

This file limits the scope and hallucinations.
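As an illustration, the small script below assembles a CLAUDE.md from the project context and constraints quoted above. The heading layout and the helper names render_claude_md and write_claude_md are assumptions for this sketch. Nothing here is a required format, since the file is free-form text that Claude reads.

```python
from pathlib import Path


def render_claude_md(context: str, constraints: list) -> str:
    """Build the file body: a context section followed by a bulleted constraint list."""
    lines = ["# Project context", "", context, "", "# Constraints", ""]
    lines += [f"- {rule}" for rule in constraints]
    return "\n".join(lines) + "\n"


# Text taken from the talk; the context sentence is left elided as in the original.
CONTENT = render_claude_md(
    "You are developing an e-commerce website...",
    [
        "Always use superpowers.",
        "Use Context7 for new libraries.",
        "Use Node 20 (do not use 24).",
        "Work in main branch for this pet project.",
    ],
)


def write_claude_md(root: Path) -> Path:
    """Write CLAUDE.md into the given project root and return its path."""
    path = root / "CLAUDE.md"
    path.write_text(CONTENT, encoding="utf-8")
    return path
```

Calling write_claude_md(Path(".")) from the project root creates the file. Keeping the constraints as short, explicit rules makes them easy to review and extend as the project grows.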

What's bad about Claude Code?

It still hallucinates. If it writes a bug, it learns from that bug in the context window and keeps iterating on it.

Solution: If it gets stuck looping on a fix, stop. Clear the memory (/clear or restart).

Tip: Use a different model (like Gemini CLI or OpenAI Codex) to analyze the bug. Ask Claude to "Explain the bug, I'm giving this report to someone else." Take that explanation, feed it to the other model, get a fix, and bring it back.

Q: Do you use Claude Code with other providers (like Gemini)?

A: I stick to Claude Code mainly because of the pricing (currently significantly cheaper/unlimited compared to token costs elsewhere) and the product experience. Gemini 3 is great for research (1M context window), but the CLI tool is poor compared to Claude's.

Q: How do you handle multiple tasks?

A: I usually run multiple tabs/terminals. I might have 3 different projects open. I know people who run dozens of containers with --dangerously-skip-permissions enabled to let it churn through backlogs, but that produces text output only.

Q: Have you used it on large codebases?

A: Yes. You can guide it by tagging specific folders (e.g., @api @models) to limit the scope. For very large projects, it helps to point it to relevant directories so it doesn't get lost scanning everything.

Q: Performance issues/Slowness?

A: Yes, especially when new versions are released or services go down (like the recent Anthropic outage). Sometimes it degrades for a week. The worst is when it hallucinates a bug and then treats that bug as truth for future context. You have to be ready to restart.

Q: Security and Privacy?

A: If you have strict compliance, OpenAI offers Zero Data Retention agreements (Enterprise). Anthropic is less clear on public guarantees for this tool yet. Some companies run local models (like Qwen) with open-source CLIs if they absolutely cannot use cloud models, but the quality is lower.

Q: Cost if prices increase?

A: Even if it goes to $1,000/month, it's worth it. It does the work of a developer.

Closing:

I will create a repository with the "superpowers" agents and my CLAUDE.md templates so you can use these practices. The main takeaway is: Limit the scope, force TDD/planning, and treat the AI as a junior developer that needs strict architectural guidance.
